Hi there! Nice to meet you.

I bring a philosophical and qualitative lens to socio-technical areas like ML evaluation, auditing, risk assessment, red teaming, and vulnerability management. I am an officer at ARVA, the nonprofit home of the AI Vulnerability Database (AVID), an affiliate at Data & Society, and a senior consultant at BABL AI. I’m also grateful to be a recipient of the 2023-2024 Magic Grant from The Brown Institute for Media Innovation.

Lately

At ARVA, I want to help co-create a thriving ecosystem of knowledge sharing across the countless groups striving to make AI less harmful. I lead our research on the potential of red-teaming for empowering communities to recognize and prevent generative AI harms, in partnership with Data & Society’s AI on the Ground team. I also co-lead our work on standards for discovering and disclosing harmful flaws in AI systems, and coordinate our efforts as a community partner organization for the White House-supported DEF CON 31 Generative Red Team event co-organized by AI Village, Humane Intelligence, and Seed AI.

I founded Accountability Case Labs, an open community dedicated to participatory approaches to challenges in the algorithmic accountability and governance space.

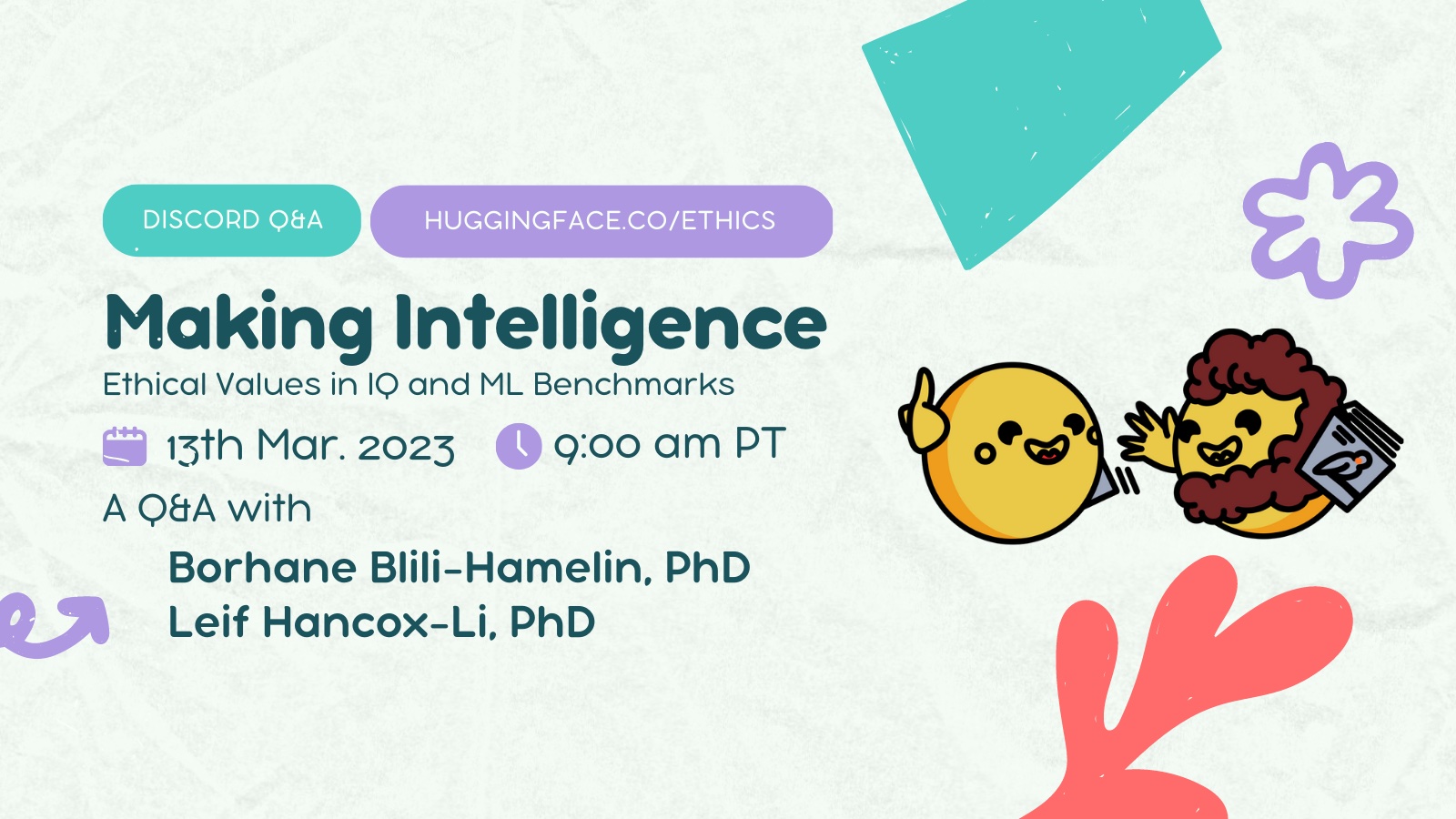

I’m fascinated by the ML research community’s approaches to self-governance, through both scholarly self-examination and processes like ethics review. My FAccT 2023 paper, co-authored with Leif Hancox-Li, examines overlooked structural similarities between IQ and ML benchmarks. Recognizing these similarities lets us draw on lessons from feminist philosophy of science that the ML benchmark community needs to consider. Building upon that work, I am collaborating on an investigation of pluralistic and reflectively value-laden correctives to AGI as a way of thinking about progress in AI.

I believe that people with PhDs can have a transformative impact outside of academia. During the pandemic, this conviction led me to collaborate on open workshops, grants, networking events, and open resources aimed at the Post Academic community. I’m currently co-director of Open Post Academics.

I was thrilled to co-design and co-curate Mozilla Festival’s 2022 Rethinking Power and Ethics Space.

I hold a PhD in Philosophy from Columbia University.

In my spare time, I love making musical instruments misbehave.

My native language is French. I’m Québecois and Tunisian. I’m currently based in New York City.

My recent projects

Red-Teaming in the Public Interest

Ongoing research project on the potential of red-teaming for empowering communities to recognize and prevent generative AI harms

Talks & Workshops

Hugging Face Ethics & Society Q&A

Leif Hancox-Li and I speak about our research on the ethical risks of ML benchmarks

Featured categories

workshop (10), Community (3), podcast (3)

Borhane Blili-Hamelin, PhD

(he/him) ML Ethics | Consulting | Research | ARVA | AVID | BABL AI

How to say my name